A robust, real-time tracker for multiple people in cluttered environments is important for video surveillance systems. A significant part of the task is to accurately segment and robustly track the objects under the constraint of low computational complexity.

We focus on two major parts: background modeling and real-time tracking. To obtain a robust background model, we propose a novel background adaptation technique that provides background model generation, model adaptation, and frame-level model group switching. The learned background can model a wide range of background changes, such as gradual or sudden illumination changes and moving backgrounds, and it yields better foreground segmentation. The online learning procedure is efficient in both computation and memory. For human tracking, the learned background models are embedded in a probabilistic Bayesian framework, particle filtering, which supports multi-object tracking. Incorporating the adaptive background model into a multi-person tracking system gives much greater robustness to these problematic phenomena without sacrificing real-time performance, making it well suited to a wide range of practical applications in human tracking and detection.
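To make the idea of online background adaptation concrete, the following is a minimal sketch of a generic per-pixel running mean and variance model. It is not the adaptive EM method of this project. The class name `RunningGaussianBackground` and the parameters `alpha`, `k`, and `min_var` are assumptions chosen for illustration, and a frame is simplified to a flat list of grayscale values.

```python
# Hypothetical sketch: a per-pixel running Gaussian background model.
# This is a generic technique, not the adaptive EM method described above.

class RunningGaussianBackground:
    def __init__(self, first_frame, alpha=0.05, k=2.5, min_var=4.0):
        self.alpha = alpha      # learning rate (assumed value)
        self.k = k              # foreground threshold in standard deviations
        self.min_var = min_var  # variance floor so pixels do not become over-sensitive
        self.mean = [float(v) for v in first_frame]
        self.var = [4.0 * min_var for _ in first_frame]

    def apply(self, frame):
        """Return a foreground mask for `frame`, then update the model in place."""
        mask = []
        for i, value in enumerate(frame):
            diff = value - self.mean[i]
            # A pixel is foreground if it deviates by more than k standard deviations.
            mask.append(diff * diff > (self.k ** 2) * self.var[i])
            # Every pixel is updated, so a persistent change is eventually absorbed.
            self.mean[i] += self.alpha * diff
            self.var[i] = max(
                self.min_var,
                (1.0 - self.alpha) * self.var[i] + self.alpha * diff * diff,
            )
        return mask


if __name__ == "__main__":
    model = RunningGaussianBackground([100, 100, 100, 100])
    scene_with_object = [100, 100, 200, 100]  # a static object appears at pixel 2
    print(model.apply(scene_with_object))     # object is flagged at first
    for _ in range(50):
        mask = model.apply(scene_with_object)
    print(mask)                               # object has been absorbed
```

Because the update runs on every pixel, an object that stays still is first reported as foreground and is later folded into the background. The memory cost is two numbers per pixel, which is one simple way to keep an online model cheap.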

Publications:

1. Edward Andrew Carlson, "Dynamic tracking of multiple people", Master's Thesis, RPI

2. Andrew Janowczyk, "Adaptive background modeling for human tracking", Master's Thesis, RPI

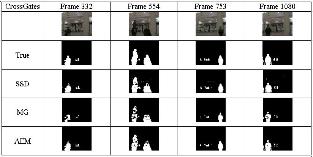

1. Comparison

Adaptive Expectation Maximization (Our Method)

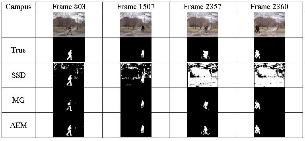

| Demo | Description |
| --- | --- |
| Campus Tracking | Video taken on the RPI campus. Targets are identified in real time and boxes are drawn around them. |
| Crossgates Mall Tracking | Video taken at the Crossgates Mall. Targets are identified in real time and boxes are drawn around them. |
| Background Modeling Demo | This output shows the background model of the scene. The scene starts in a training mode and then switches to a display mode in which red marks background and green marks foreground. Note that the cereal box is initially identified as foreground but is added to the background after a certain amount of time. |
| Combined Background + Particle Filtering Demo | In the red = background, green = foreground mode, the new background modeling detects that the cereal box and plant are background and, over time, naturally adds them to the scene. |
| Fountain (Background + Particle Filtering) | Here we can see the benefits of integrating background modeling with particle filtering. It becomes straightforward to track people, even in front of moving objects such as the fountain. |
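As a rough illustration of how a foreground mask can drive a particle filter, here is a minimal sketch of one bootstrap particle filter step for a single target on a one-dimensional strip of pixels. Using the mask as the observation likelihood is an assumption about how the two parts connect, not the project's actual formulation. The function names and the parameters `motion_std` and `floor` are hypothetical.

```python
import random

# Hypothetical sketch: a bootstrap particle filter whose observation
# likelihood comes from a foreground mask (single target, 1-D positions).

def particle_filter_step(particles, foreground, rng, motion_std=2.0, floor=0.01):
    """Run one predict / weight / resample step and return the new particles."""
    n = len(foreground)
    # Predict: random-walk motion, clamped to the valid pixel range.
    moved = [min(n - 1, max(0, p + rng.gauss(0.0, motion_std))) for p in particles]
    # Weight: particles on foreground pixels are far more likely than the rest.
    weights = [1.0 if foreground[int(round(p))] else floor for p in moved]
    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def estimate(particles):
    """Posterior mean position of the particle set."""
    return sum(particles) / len(particles)


if __name__ == "__main__":
    rng = random.Random(0)
    # Foreground blob (the "person") covers pixels 60 to 69.
    foreground = [60 <= i < 70 for i in range(100)]
    particles = [rng.uniform(0, 99) for _ in range(500)]
    for _ in range(10):
        particles = particle_filter_step(particles, foreground, rng)
    print(estimate(particles))
```

Background motion that the background model has already learned, such as a fountain, does not appear in the mask, so it does not attract particles. In this sketch that is why a good background model makes the tracking step easier.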

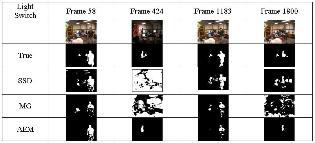

| Demo | Description |
| --- | --- |
| Light Switch Tracking (Background + Particle Filtering) | Here we can see the benefit of a background modeler that allows the scene to be in multiple states. A person can be tracked easily even when the light switches from on to off. |
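One way to picture a background that can be in multiple states is to keep several background models and select one per frame. The sketch below does this with a simple mean absolute difference test. It is an illustrative assumption, not the model group switching used in this project, and the class name `SwitchingBackground` and all thresholds are hypothetical.

```python
# Hypothetical sketch: frame-level switching between several background models.

def mean_abs_diff(frame, model):
    """Average absolute per-pixel difference between a frame and a model."""
    return sum(abs(a - b) for a, b in zip(frame, model)) / len(frame)


class SwitchingBackground:
    def __init__(self, new_model_threshold=40.0, alpha=0.05):
        self.models = []                      # one mean image per scene state
        self.threshold = new_model_threshold  # frame error that triggers a new state
        self.alpha = alpha                    # learning rate for the active model
        self.active = None

    def select(self, frame):
        """Pick the closest model, or create one if none fits; return its index."""
        errors = [mean_abs_diff(frame, m) for m in self.models]
        if not errors or min(errors) > self.threshold:
            self.models.append([float(v) for v in frame])
            self.active = len(self.models) - 1
        else:
            self.active = errors.index(min(errors))
            model = self.models[self.active]
            for i, v in enumerate(frame):
                model[i] += self.alpha * (v - model[i])
        return self.active

    def foreground(self, frame, pixel_threshold=30.0):
        """Foreground mask of `frame` against the currently active model."""
        model = self.models[self.active]
        return [abs(v - m) > pixel_threshold for v, m in zip(frame, model)]


if __name__ == "__main__":
    bg = SwitchingBackground()
    bg.select([200] * 8)  # lights on: first state is created
    bg.select([60] * 8)   # lights off: a second state is created
    dark_with_person = [60, 60, 150, 150, 60, 60, 60, 60]
    bg.select(dark_with_person)
    print(bg.foreground(dark_with_person))
```

With a single model, the whole frame would look like foreground right after the light changes. Selecting the model at the frame level keeps the mask limited to the person in this toy example.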