A brain-computer interface (BCI) is a communication technique that enables people to interact with the outside world using brain signals, without performing body movements. With better prediction of a person’s intended movement, BCI can not only help improve the motor capability of people with motor impairments but also enhance the performance of able-bodied users. The analysis of brain signals is crucial for BCI. In collaboration with Dr. Gerwin Schalk from the Wadsworth Center, New York State Department of Health, we primarily analyze and model electrocorticographic (ECoG) signals recorded from human subjects with electrodes placed subdurally for clinical purposes. We apply and develop probabilistic graphical models to characterize how ECoG signals progress in space and time. This approach not only provides an intuitive interpretation of the spatio-temporal relationships among different brain areas, but also captures the temporal variation within brain signals, which conventional methods do not sufficiently consider.

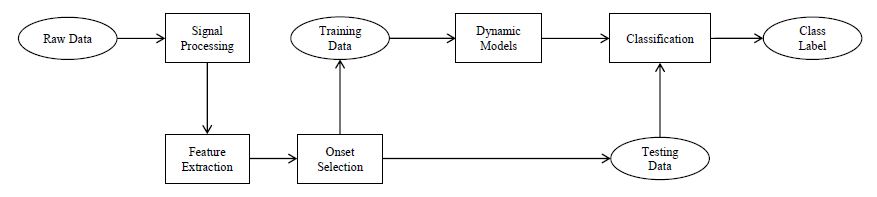

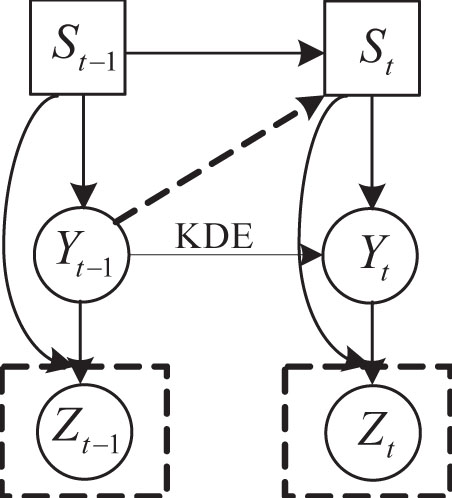

This research models the spatio-temporal dependencies among different channels of ECoG signals using several variants of Dynamic Bayesian Networks (DBNs). Instead of modeling the raw signals, we model features obtained by spectral filtering: the amplitude time courses of selected frequency bands, chosen based on neuroscience and engineering research.
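As a rough illustration of what an amplitude time course of a frequency band looks like, the sketch below computes one with a windowed discrete Fourier transform. This is a simple stand-in for spectral filtering, not the filter used in this research; the function name `band_amplitude`, the 1000 Hz sampling rate, the 70-110 Hz band, and the synthetic signal are all assumptions made for the example.

```python
import cmath
import math

def band_amplitude(signal, fs, f_lo, f_hi, win, hop):
    """Amplitude time course of one channel in the band [f_lo, f_hi] Hz.

    For each window of `win` samples (advanced by `hop` samples), sum the
    power of the DFT bins that fall inside the band and take the square root.
    """
    # DFT bin k corresponds to the frequency k * fs / win.
    bins = [k for k in range(1, win // 2) if f_lo <= k * fs / win <= f_hi]
    course = []
    for start in range(0, len(signal) - win + 1, hop):
        seg = signal[start:start + win]
        power = 0.0
        for k in bins:
            coef = sum(x * cmath.exp(-2j * math.pi * k * n / win)
                       for n, x in enumerate(seg))
            # The 2/win scaling maps a pure sinusoid of amplitude A to A.
            power += (2.0 * abs(coef) / win) ** 2
        course.append(math.sqrt(power))
    return course

# Synthetic channel (hypothetical): an 80 Hz component whose amplitude rises
# from 1 to 3 halfway through, plus a strong 10 Hz component outside the band.
fs = 1000
sig = [(1.0 if n < 200 else 3.0) * math.sin(2 * math.pi * 80 * n / fs)
       + 5.0 * math.sin(2 * math.pi * 10 * n / fs)
       for n in range(400)]
course = band_amplitude(sig, fs, 70, 110, win=100, hop=100)
```

The resulting `course` tracks the 80 Hz amplitude and ignores the 10 Hz component. A sequence like this, one per channel and band, is the kind of feature a DBN would then model in place of the raw signal.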

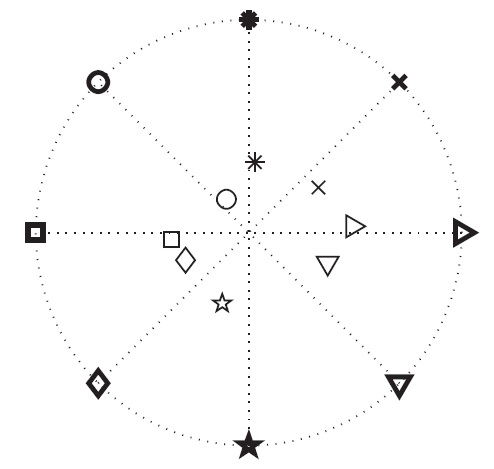

During the motor task, the human subject held a joystick to move a cursor on the screen to hit a virtual target. The target could appear at one of eight locations. The goal is to classify the direction of the movement using only the multi-channel ECoG signals. We applied three DBN variants: an autoregressive model, a hidden Markov model (HMM), and a coupled HMM. According to preliminary results from a two-channel experiment, the coupled HMM outperforms the other two models in most cases.
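A common way to classify sequences with HMMs is to fit one model per class and pick the class whose model assigns the highest likelihood to the observed sequence. The sketch below shows that scheme for a plain discrete-observation HMM using the scaled forward algorithm. It is only illustrative: the two labels, the model parameters, and the quantization of features into symbols 0 (low amplitude) and 1 (high amplitude) are hypothetical, and the coupled HMM used in this research is not reproduced here.

```python
import math

def log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: log P(obs | HMM) for discrete observations."""
    n = len(start)
    loglik = 0.0
    alpha = None
    for t, o in enumerate(obs):
        if t == 0:
            alpha = [start[j] * emit[j][o] for j in range(n)]
        else:
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][o]
                     for j in range(n)]
        # Rescale at every step to avoid numerical underflow on long sequences.
        scale = sum(alpha)
        loglik += math.log(scale)
        alpha = [a / scale for a in alpha]
    return loglik

def classify(obs, models):
    """Return the label of the model with the highest log-likelihood."""
    return max(models, key=lambda label: log_likelihood(obs, *models[label]))

# Hypothetical two-class setup. State 0 mostly emits symbol 0 (low amplitude),
# state 1 mostly emits symbol 1 (high amplitude).
emit = [[0.9, 0.1], [0.1, 0.9]]
models = {
    # Tends to start low and move to high.
    "direction_A": ([0.9, 0.1], [[0.7, 0.3], [0.1, 0.9]], emit),
    # Tends to start high and move to low.
    "direction_B": ([0.1, 0.9], [[0.9, 0.1], [0.3, 0.7]], emit),
}

rising = [0, 0, 1, 1, 1]
falling = [1, 1, 0, 0, 0]
label_rising = classify(rising, models)
label_falling = classify(falling, models)
```

With eight target locations, the same scheme would use eight models. A coupled HMM differs in that the hidden state of each channel also depends on the previous hidden states of the other channels, which lets the model capture interactions between channels.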

Determining a person’s intent, e.g., where and when to move, from brain signals rather than from muscles would have important applications in clinical or other domains. For example, detection of the onset and direction of intended movements may provide the basis for restoration of simple grasping function in people with chronic stroke, or could be used to optimize a user’s interaction with the surrounding environment.

Detecting the onset and direction of actual movements is the first step in this direction. In this study, we demonstrated that we can detect the onset of intended movements and their direction using ECoG signals. The information encoded in ECoG about these movements can be used to improve performance in a targeting task. A support vector machine (SVM) is applied for onset prediction, and a novel modified time-varying dynamic Bayesian network (MTVDBN) is proposed for direction prediction. The model can also be applied to practical tasks in everyday life. For example, it may support faster braking during vehicle operation or more rapid targeting in military applications.

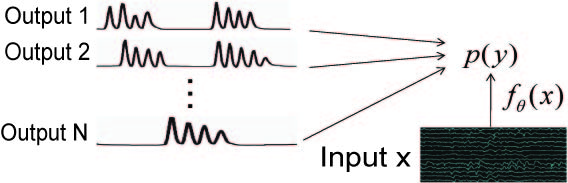

This research addresses two fundamental issues in decoding: better generalization and better features. To achieve better generalization, we propose to incorporate anatomical and kinematic knowledge into the decoding process. This represents a shift from traditional data-driven decoding methods. Incorporating generic knowledge into the decoding not only improves generalization across subjects and across trials but also reduces dependence on training data. For better features, we propose a deep learning framework to learn features for specific BCI tasks. This departs from the current practice, which mainly uses manually defined brain signal features. Such learned features are better suited to the specific BCI task, and therefore lead to better decoding performance.

Most existing regression and classification algorithms are data-driven and ignore constraints in the application domain, even though in many domains these constraints can be easily obtained and have the potential to substantially improve performance. For example, in BCI the movement of an external device such as a prosthetic limb is subject to anatomical and kinematic constraints. Existing decoding algorithms ignore these constraints. We propose a Bayesian decoding model that explicitly captures the constraints and combines them with brain measurements, yielding a significant improvement in decoding accuracy. Specifically, we exploit the constraints that govern finger flexion and incorporate them into a prior model of finger movement. Improved finger flexion decoding is then achieved by combining the prior model with the ECoG signals.
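To make the idea of combining a prior with measurements concrete, here is a minimal one-dimensional sketch. It assumes a Gaussian smoothness prior (flexion changes little between consecutive samples) and a Gaussian measurement derived from ECoG, which reduces to a Kalman-style update. This is a deliberately simplified stand-in and not the prior model proposed in this research; `fuse`, `decode`, and all numeric values are hypothetical.

```python
def fuse(prior_mean, prior_var, meas, meas_var):
    """Posterior of a Gaussian prior combined with a Gaussian measurement."""
    gain = prior_var / (prior_var + meas_var)
    post_mean = prior_mean + gain * (meas - prior_mean)
    post_var = (1.0 - gain) * prior_var
    return post_mean, post_var

def decode(measurements, meas_var, step_var, init_mean=0.0, init_var=1.0):
    """Sequentially fuse noisy per-sample estimates with a smoothness prior."""
    mean, var = init_mean, init_var
    trajectory = []
    for z in measurements:
        # Assumed kinematic prior: the next position stays near the current
        # estimate, with uncertainty growing by step_var per sample.
        prior_mean, prior_var = mean, var + step_var
        mean, var = fuse(prior_mean, prior_var, z, meas_var)
        trajectory.append(mean)
    return trajectory

# Hypothetical noisy flexion estimates obtained from ECoG features alone.
noisy = [0.0, 1.0, 0.0, 1.0]
decoded = decode(noisy, meas_var=1.0, step_var=0.01)
single = fuse(0.0, 1.0, 2.0, 1.0)
```

With equal prior and measurement variances, `fuse` lands halfway between the prior mean and the measurement. In `decode`, the small `step_var` encodes a strong belief that flexion changes slowly, so the decoded trajectory fluctuates much less than the raw estimates.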

Learning with Target Prior is a new learning scheme in which prior knowledge about the target variable, together with uncorresponded data (inputs without paired target values), is used to train the parametric function that maps data to target variables. It is applied to the decoding problem in BCI and to the body pose estimation problem in computer vision.

We developed a framework that learns deep features by incorporating prior knowledge about the target variables. Features learned with this method strike a balance between generality and task specificity.

Rui Zhao, Gerwin Schalk, Qiang Ji. Temporal Pattern Localization using Mixed Integer Linear Programming. 24th International Conference on Pattern Recognition (ICPR), 2018. [PDF]

Rui Zhao, Gerwin Schalk, Qiang Ji. Robust Signal Identification for Dynamic Pattern Classification. 23rd International Conference on Pattern Recognition (ICPR), 2016. [PDF]

Rui Zhao, Gerwin Schalk, Qiang Ji. Coupled Hidden Markov Model for Electrocorticographic Signal Classification. 22nd International Conference on Pattern Recognition (ICPR), 2014. [PDF]

Zuoguan Wang, Siwei Lyu, Gerwin Schalk, Qiang Ji. Deep Feature Learning using Target Priors with Applications in ECoG Signal Decoding for BCI. International Joint Conferences on Artificial Intelligence (IJCAI) 2013, Oral Presentation. [PDF]

Zuoguan Wang, Siwei Lyu, Gerwin Schalk, Qiang Ji. Learning with Target Prior. Annual Conference on Neural Information Processing Systems (NIPS) 2012. [PDF]

Zuoguan Wang, Aysegul Gunduz, Peter Brunner, Anthony L. Ritaccio, Qiang Ji and Gerwin Schalk, Decoding onset and direction of movements using electrocorticographic (ECoG) signals in humans. Frontiers in Neuroengineering, 2012. [PDF]

Zuoguan Wang, Gerwin Schalk, Qiang Ji. Anatomically Constrained Decoding of Finger Flexion from Electrocorticographic Signals. Annual Conference on Neural Information Processing Systems (NIPS) 2011. Spotlight presentation- top 5%. [PDF]

Zuoguan Wang, Qiang Ji, Kai J. Miller, and Gerwin Schalk, Prior Knowledge Improves Decoding of Finger Flexion from Electrocorticographic (ECoG) Signals, Frontiers in Neuroscience, 2011. [PDF]

Ryan M. Hope, Ziheng Wang, Zuoguan Wang, Qiang Ji and Wayne D. Gray. Workload Classification Across Subjects Using EEG. Proceedings of the Human Factors and Ergonomics Society Annual meeting, 2011. [PDF]

Ziheng Wang, Ryan M. Hope, Zuoguan Wang, Qiang Ji and Wayne D. Gray. An EEG Workload Classifier for Multiple Subjects. IEEE Annual International Conference on Engineering in Medicine and Biology (EMBC), 2011. [PDF]

Ziheng Wang, Ryan Hope, Zuoguan Wang, Qiang Ji, and Wayne Gray. Cross-Subject Workload Classification with a Hierarchical Bayes Model. NeuroImage, 2011. [PDF]

Zuoguan Wang, Qiang Ji, Gerwin Schalk, Kai J. Miller, Decoding finger flexion from Electrocorticographic signals using sparse Gaussian process, International Conference on Pattern Recognition, 2010. [PDF]