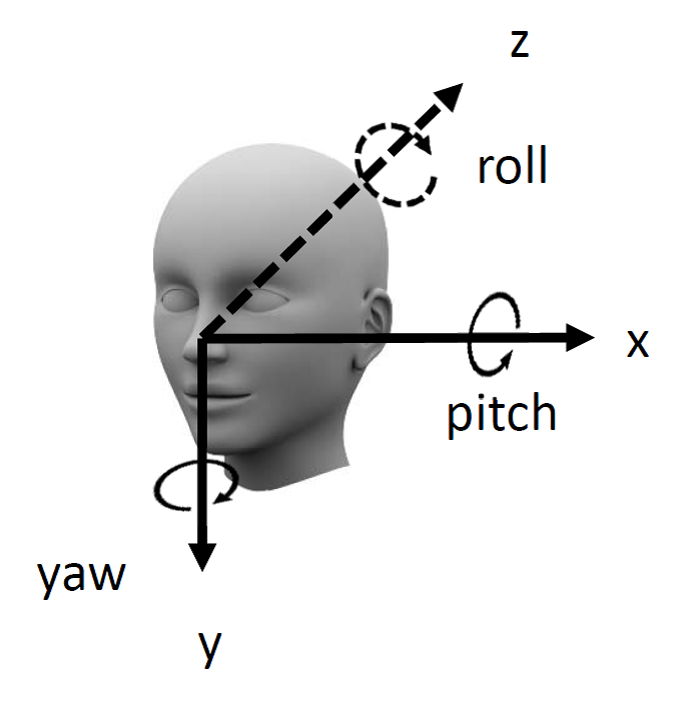

Head pose estimation methods estimate the orientation and position of the head relative to the camera coordinate frame from facial images. The estimated pose can be coarse (e.g., left, frontal, or right) or fine (e.g., pitch, yaw, and roll angles, as shown in Figure 1).

Figure 1. Head pose rotation angles: pitch, yaw and roll.

Head pose estimation methods can be classified into learning-based and model-based approaches. Learning-based methods directly learn the mapping from image appearance to pose angles, while model-based methods link a 3D model to the 2D observations (e.g., 2D facial landmark locations) through a projection model.

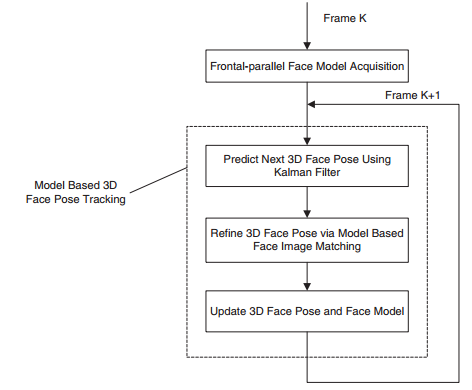

In the first set of works [1-3], we focus on learning-based methods. In the early work [1], wavelet features are used to describe the face image, and principal component analysis (PCA) is used to construct the face space. The pose angles are determined from the PCA coefficients. In [2-3], face pose is estimated through the projection of an updated reference face. The face pose is then tracked across frames using Kalman filtering (Figure 2).

Figure 2. Flowchart of the face pose tracking method [2-3].
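
As an illustration of the tracking step, the following is a minimal sketch of a Kalman filter that smooths a single pose angle over frames. It assumes a constant-velocity state model (angle and angular velocity) and hand-picked noise variances; the state model and parameters actually used in [2-3] are not given here, and the function name `kalman_track_angle` is hypothetical.

```python
import numpy as np

def kalman_track_angle(measurements, dt=1.0, process_var=1e-2, meas_var=4.0):
    """Smooth a sequence of noisy angle measurements (one per frame).

    Assumed model (not from the source): state = [angle, angular velocity],
    constant velocity between frames, only the angle is observed.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])           # state transition
    H = np.array([[1.0, 0.0]])                      # measurement matrix
    Q = process_var * np.array([[dt**4 / 4, dt**3 / 2],
                                [dt**3 / 2, dt**2]])  # process noise
    R = np.array([[meas_var]])                      # measurement noise

    x = np.array([float(measurements[0]), 0.0])     # start at first measurement
    P = np.eye(2) * meas_var                        # initial uncertainty
    estimates = [x[0]]

    for z in measurements[1:]:
        # Predict the next state from the motion model.
        x = F @ x
        P = F @ P @ F.T + Q
        # Correct the prediction with the new measurement.
        innovation = z - H @ x                      # shape (1,)
        S = H @ P @ H.T + R                         # innovation covariance
        gain = P @ H.T @ np.linalg.inv(S)           # Kalman gain, shape (2, 1)
        x = x + gain @ innovation
        P = (np.eye(2) - gain @ H) @ P
        estimates.append(x[0])

    return np.array(estimates)
```

Calling `kalman_track_angle(yaw_per_frame)` on a list of per-frame yaw estimates returns a filtered sequence of the same length; pitch and roll could be filtered the same way, or all three angles could share one larger state vector.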

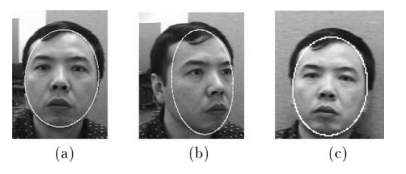

In the second set of works [4-10], we focus on model-based approaches. All of these methods rely on a projection model (e.g., weak perspective projection) that maps the 3D shape to 2D. In the early works [4-5], geometric features derived from the eye locations are used for pose estimation. In [6-8], the 2D face is represented as an ellipse, and the pose is estimated from the shape of the ellipse (Figure 3). In [9-10], pose estimation is based on tracked facial landmark locations. In [9], weak perspective projection and a 3D deformable model are used for pose estimation. In [10], six rigid facial landmarks are tracked across frames, and the pose parameters are estimated using a general 3D facial shape model.

Figure 3. Face shape distortions due to face orientation and perspective projection.
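
To make the weak perspective model concrete, here is a minimal sketch that projects 3D points to 2D for a given pitch, yaw, and roll. The rotation order `Rz(roll) @ Ry(yaw) @ Rx(pitch)`, the axis conventions, and the function names are assumptions made for illustration; the cited works may use different conventions.

```python
import numpy as np

def rotation_matrix(pitch, yaw, roll):
    """3x3 rotation from angles in radians.

    Assumed convention: pitch about x, yaw about y, roll about z,
    composed as R = Rz(roll) @ Ry(yaw) @ Rx(pitch).
    """
    cx, sx = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    cz, sz = np.cos(roll), np.sin(roll)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def weak_perspective_project(points_3d, pitch, yaw, roll, scale=1.0, t=(0.0, 0.0)):
    """Project (3, N) points to (2, N) image points.

    Weak perspective: rotate, drop the depth coordinate, apply one
    global scale, then add a 2D translation.
    """
    R = rotation_matrix(pitch, yaw, roll)
    M = scale * R[:2, :]                       # 2x3 projection matrix
    return M @ points_3d + np.asarray(t, dtype=float).reshape(2, 1)
```

Because the depth coordinate is dropped after rotation, all points share the same scale. This is what makes the model linear in the 3D shape and convenient for landmark-based pose estimation.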

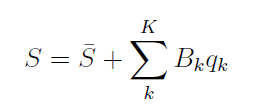

In more recent work, we improve on the landmark-based pose estimation methods by simultaneously recovering the head pose and the 3D facial shape. First, to capture 3D shape variations, we learn a 3D deformable shape model using principal component analysis. Each 3D shape S is then represented as a linear combination of an average 3D shape and a few basis shapes.

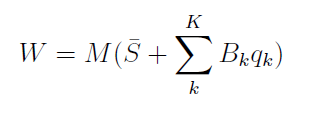

Then, given the weak perspective projection matrix, we can link the 3D model and 2D facial landmark locations as follows:

Here, W is the observation matrix, determined by the 2D facial landmarks detected in the image, and M is the weak perspective projection matrix, determined by the head pose. For simultaneous pose and deformation estimation, we formulate the following optimization problem:

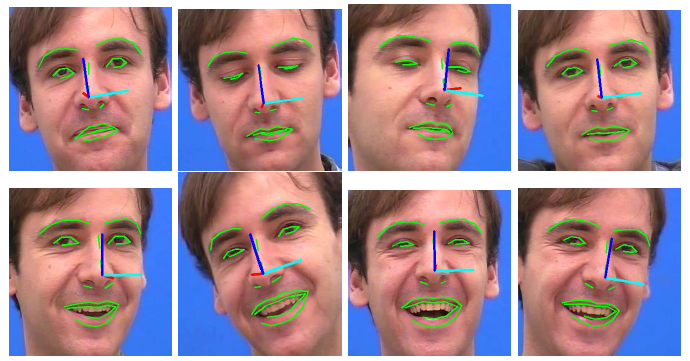
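
For reference, the shape model, the projection relation, and the objective described above are commonly written in the following form. This is a sketch under assumed notation: the symbols $\bar{S}$, $B_k$, $K$, and $N$ are introduced here for illustration, and the 2D translation is assumed to have been removed by centering both the landmarks and the 3D shape. Only S, W, M, and q appear in the text.

```latex
% Linear 3D deformable shape model (N landmarks, K basis shapes)
S = \bar{S} + \sum_{k=1}^{K} q_k B_k,
\qquad S,\ \bar{S},\ B_k \in \mathbb{R}^{3 \times N}

% Weak perspective link between 3D shape and 2D landmarks
W = M S,
\qquad W \in \mathbb{R}^{2 \times N},\quad M \in \mathbb{R}^{2 \times 3}

% Joint pose and deformation estimation
(M^{*}, q^{*}) = \arg\min_{M,\, q}
\left\| W - M \left( \bar{S} + \sum_{k=1}^{K} q_k B_k \right) \right\|_F^{2}
```

The objective is linear in M when q is fixed and linear in q when M is fixed, which is what makes an alternating solution natural.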

To solve this problem, we alternate between solving for the motion matrix M and solving for the 3D deformable model coefficients q. From M, we can then estimate the head pose angles. Experimental results demonstrate the effectiveness of the proposed method (Figure 4).

Figure 4. Head pose estimation results on talking face video sequence.
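
The alternating scheme described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact procedure used in our work: the landmarks and shapes are assumed to be centered so that translation drops out, each M update is an unconstrained least-squares fit that is then snapped to scaled-orthonormal form via SVD (a simple heuristic), and the angle convention is `R = Rz(roll) @ Ry(yaw) @ Rx(pitch)`. All function and variable names (`S_mean`, `B`, `fit_pose_and_shape`, and so on) are hypothetical.

```python
import numpy as np

def snap_to_weak_perspective(M_affine):
    """Replace a 2x3 matrix by scale * (two orthonormal rows) using its SVD."""
    U, s, Vt = np.linalg.svd(M_affine, full_matrices=False)  # U: 2x2, Vt: 2x3
    return s.mean() * (U @ Vt)

def fit_pose_and_shape(W, S_mean, B, n_iters=20):
    """Alternate between the motion matrix M and the shape coefficients q.

    W:      (2, N) detected 2D landmarks, centered at their mean.
    S_mean: (3, N) average 3D shape, centered at its mean.
    B:      (K, 3, N) basis shapes of the deformable model.
    """
    K = B.shape[0]
    q = np.zeros(K)
    for _ in range(n_iters):
        # M-step (q fixed): least-squares fit of W ~ M S, then snap.
        S = S_mean + np.tensordot(q, B, axes=1)                 # (3, N)
        M_affine = np.linalg.lstsq(S.T, W.T, rcond=None)[0].T   # (2, 3)
        M = snap_to_weak_perspective(M_affine)
        # q-step (M fixed): W - M S_mean ~ sum_k q_k (M B_k) is linear in q.
        A = np.stack([(M @ B[k]).ravel() for k in range(K)], axis=1)  # (2N, K)
        r = (W - M @ S_mean).ravel()
        q = np.linalg.lstsq(A, r, rcond=None)[0]
    return M, q

def pose_angles_from_M(M):
    """Recover (pitch, yaw, roll, scale) from a scaled-orthonormal 2x3 M.

    Assumes R = Rz(roll) @ Ry(yaw) @ Rx(pitch) and M = scale * R[:2].
    """
    scale = np.linalg.norm(M[0])
    r1, r2 = M[0] / scale, M[1] / scale
    r3 = np.cross(r1, r2)                      # third row of the rotation
    yaw = np.arcsin(np.clip(-r3[0], -1.0, 1.0))
    pitch = np.arctan2(r3[1], r3[2])
    roll = np.arctan2(r2[0], r1[0])
    return pitch, yaw, roll, scale
```

A typical call would be `M, q = fit_pose_and_shape(W, S_mean, B)` followed by `pose_angles_from_M(M)`. Each sub-problem is an ordinary linear least-squares fit. The snapping step keeps M a valid weak perspective matrix, at the cost of no longer guaranteeing that every iteration reduces the residual.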

[1] Mukesh C. Motwani and Qiang Ji, "3D face pose discrimination using wavelets," International Conference on Image Processing, 2001.

[2] Zhiwei Zhu and Qiang Ji, "Real Time 3D Face Pose Tracking From an Uncalibrated Camera," IEEE Conference on Computer Vision and Pattern Recognition Workshop, 2004.

[3] Zhiwei Zhu and Qiang Ji, "3D face pose tracking from an uncalibrated monocular camera," International Conference on Pattern Recognition, 2004.

[4] Qiang Ji and Xiaojie Yang, "Real-time eye, gaze, and face pose tracking for monitoring driver vigilance," Real-Time Imaging, 2002.

[5] Qiang Ji and Xiaojie Yang, "Real time 3D face pose discrimination based on active IR illumination," International Conference on Pattern Recognition, 2002.

[6] Qiang Ji, "3D Face pose estimation and tracking from a monocular camera," Image and Vision Computing, 2002.

[7] Qiang Ji, Mauro S. Costa, Robert M. Haralick and Linda G. Shapiro, "An integrated linear technique for pose estimation from different geometric features," International Journal of Pattern Recognition and Artificial Intelligence, 1999.

[8] Qiang Ji, Mauro Costa, Robert Haralick, and Linda Shapiro, "An integrated linear technique for pose estimation from different features," Vision Interface, 1998.

[9] Zhiwei Zhu and Qiang Ji, "Robust real-time face pose and facial expression recovery," IEEE Conference on Computer Vision and Pattern Recognition, 2006.

[10] Yan Tong, Yang Wang, Zhiwei Zhu and Qiang Ji, "Robust Facial Feature Tracking under Varying Face Pose and Facial Expression," Pattern Recognition, 2007.