Real-Time Non-invasive Human Fatigue Monitoring

We have been working on a project (funded by Honda and AFOSR) to develop non-invasive techniques for monitoring human fatigue. The project uses remote video cameras (possibly hidden) focused on an individual's eyes and face. We propose image processing techniques that automatically analyze the video in real time and simultaneously extract several facial features that characterize the individual's state of mind. The visual features include eyelid movements (eye blink frequency, closure duration), eye gaze, face orientation (for detecting nodding), and facial expression (such as yawning). To improve the robustness and accuracy of the system, the extracted visual cues are systematically combined, along with the available circumstantial evidence, using a probabilistic framework to generate a composite fatigue score. An outline of our approach is given in the system flowchart.

Our recent research efforts have led to the development of:

· non-intrusive real-time computer vision techniques to extract multiple fatigue parameters related to eyelid movements, gaze, head movement, and facial expressions.

· a probabilistic framework based on Dynamic Bayesian Networks to model and integrate contextual information and visual cues for fatigue detection over time.
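To illustrate how a probabilistic model can integrate several visual cues over time, here is a minimal sketch of a two-state filter (a hidden Markov model, the simplest kind of dynamic Bayesian network). It is not the project's actual network: the state names, the cue names, and every probability value are assumptions chosen only for illustration, and the cues are assumed to be conditionally independent given the fatigue state.

```python
# Minimal two-state dynamic Bayesian filter (an HMM, the simplest DBN).
# All names and probabilities are illustrative assumptions, not project values.

STATES = ("alert", "fatigued")

# P(state at time t | state at time t-1): fatigue tends to persist.
TRANSITION = {
    "alert": {"alert": 0.9, "fatigued": 0.1},
    "fatigued": {"alert": 0.2, "fatigued": 0.8},
}

# P(cue is observed | state), cues assumed conditionally independent.
P_CUE = {
    "high_perclos": {"alert": 0.10, "fatigued": 0.70},
    "yawning": {"alert": 0.05, "fatigued": 0.40},
    "nodding": {"alert": 0.05, "fatigued": 0.50},
}


def update_belief(belief, observation):
    """One predict-and-correct step; returns the new normalized belief."""
    # Predict: propagate the previous belief through the transition model.
    predicted = {
        s: sum(belief[prev] * TRANSITION[prev][s] for prev in STATES)
        for s in STATES
    }
    # Correct: weight each state by the likelihood of the observed cues.
    unnormalized = {}
    for s in STATES:
        likelihood = 1.0
        for cue, seen in observation.items():
            p = P_CUE[cue][s]
            likelihood *= p if seen else (1.0 - p)
        unnormalized[s] = predicted[s] * likelihood
    total = sum(unnormalized.values())
    return {s: v / total for s, v in unnormalized.items()}


def fatigue_scores(observations, prior_fatigued=0.1):
    """Return P(fatigued) after each observation in the sequence."""
    belief = {"alert": 1.0 - prior_fatigued, "fatigued": prior_fatigued}
    scores = []
    for obs in observations:
        belief = update_belief(belief, obs)
        scores.append(belief["fatigued"])
    return scores


if __name__ == "__main__":
    frames = [
        {"high_perclos": False, "yawning": False, "nodding": False},
        {"high_perclos": True, "yawning": False, "nodding": False},
        {"high_perclos": True, "yawning": True, "nodding": True},
    ]
    for step, score in enumerate(fatigue_scores(frames)):
        print(f"step {step}: P(fatigued) = {score:.3f}")
```

Because each step starts from the previous belief, a single abnormal cue moves the score less than several cues that agree over consecutive steps, which is the intuition behind fusing multiple parameters rather than relying on one.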

We have successfully integrated the different components of our fatigue monitoring system into a prototype (see the figures below). The prototype, equipped with two IR cameras, simultaneously tracks eyelid movements (e.g. PERCLOS), gaze, facial expressions, and head pose in real time and produces a composite measure of human (driver) state by fusing the different fatigue parameters. Compared with a system that uses a single visual parameter (e.g. PERCLOS), our driver monitoring and fatigue detection is therefore more robust and accurate under realistic driving conditions.

Representing the state of the art in real-time non-invasive fatigue monitoring, our system works rather robustly in an indoor environment for different subjects. Possible applications of our technologies include driver fatigue monitoring, human-computer interaction, and human factors studies. We are ready to demonstrate the system to anyone who is interested, and we can send a CD-ROM that includes the latest video demo of our system. Our technologies need further development to become commercially viable, and we are looking for partners to continue this development. Please contact Dr. Qiang Ji at qji@ecse.rpi.edu or call him at (518) 276 6440.

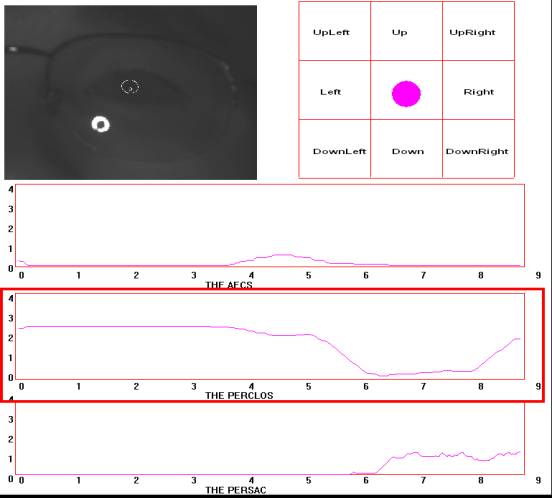

Figure 1: Real-time plot of eyelid and eye gaze parameters over time. AECS represents the average eye closure and opening speed; PERCLOS is the percentage of eye closure; PERSAC is the percentage of saccade eye movements over time.
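As a rough illustration of the PERCLOS parameter, the sketch below computes the percentage of frames in which the eye counts as closed, both over a whole recording and over a sliding window. The per-frame `openness` values (0 for fully closed, 1 for fully open), the function names, and the closure threshold of 0.2 are assumptions made for this example; the threshold actually used by the system is not stated here.

```python
from collections import deque


def perclos(openness, closed_threshold=0.2):
    """Percentage of frames in which the eye counts as closed.

    openness: per-frame eye openness in [0, 1] (assumed input format).
    closed_threshold: openness at or below this value counts as closed
    (an assumed value, not taken from the project).
    """
    if not openness:
        raise ValueError("need at least one frame")
    closed = sum(1 for o in openness if o <= closed_threshold)
    return 100.0 * closed / len(openness)


def rolling_perclos(openness, window, closed_threshold=0.2):
    """PERCLOS over the most recent `window` frames, one value per frame."""
    recent = deque(maxlen=window)  # oldest frame drops out automatically
    values = []
    for o in openness:
        recent.append(o <= closed_threshold)
        values.append(100.0 * sum(recent) / len(recent))
    return values


if __name__ == "__main__":
    frames = [1.0, 0.9, 0.1, 0.0, 0.8, 1.0, 0.15, 0.05]
    print(perclos(frames))
    print(rolling_perclos(frames, window=4))
```

The sliding-window variant is what a real-time plot would need, since it yields one updated value per incoming frame.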

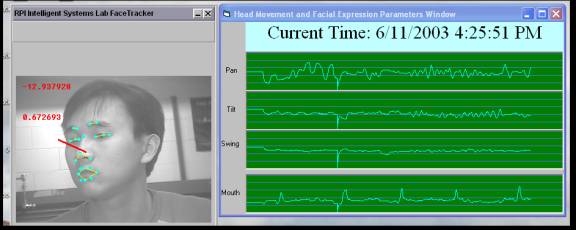

Figure 2: Real-time plot of face pose parameters (pan, tilt, and swing) and the facial expression parameter (mouth) over time.

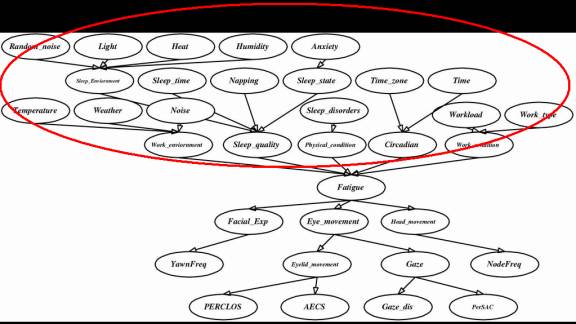

Figure 3. The Dynamic Bayesian Network model used to represent and detect fatigue.

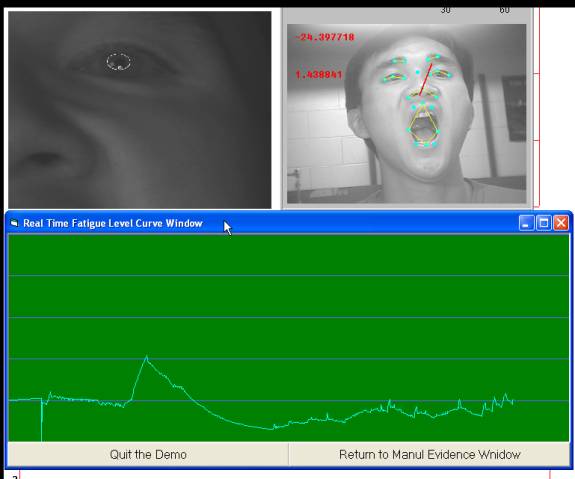

Figure 3. The prototype system: the upper left corner shows the image from the eye camera; the upper right corner shows the image from the face camera; the bottom shows the real-time plot of the composite fatigue curve over time.

Figure 4. Human subjects study to validate our fatigue monitor. The study correlates the output of our fatigue monitor with that of the TOVA (a vigilance test) and with that of EEG and EOG.

The study results are presented below.

PERCLOS versus TOVA response time. The two parameters are clearly correlated, almost linearly. A larger PERCLOS measurement corresponds to a longer reaction time.
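One common way to quantify an "almost linear" relationship like this is the Pearson correlation coefficient. The sketch below is only an illustration of that calculation: the paired values are hypothetical numbers invented for the example, not data from the study, and the source does not state which statistic the study itself used.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical paired measurements, one pair per test session.
perclos_values = [5.0, 12.0, 20.0, 31.0, 40.0]        # percent
response_ms = [310.0, 350.0, 420.0, 480.0, 560.0]     # milliseconds

r = pearson(perclos_values, response_ms)

if __name__ == "__main__":
    print(f"Pearson r = {r:.3f}")
```

A value of r close to +1 indicates that larger PERCLOS goes with longer response time; a parameter that decreases with fatigue would instead give a value close to -1.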

PERCLOS measurements for evening (blue) and morning (red) bouts. The subject is fully alert in the evening session and fatigued in the morning session.

AECS versus TOVA response time. The two parameters are clearly correlated, almost linearly. A larger AECS measurement corresponds to a longer reaction time.

AECS measures average eye closure/opening speed (as measured by the time needed to fully close/open eyes).
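The sketch below shows one possible way to estimate such a measure from a per-frame eye openness signal: it times each transition from open to closed and averages the durations. For brevity it covers only the closing direction. The input format, the function names, and the two threshold levels are assumptions for this example rather than details of the actual system.

```python
def closure_durations(times_ms, openness, open_level=0.8, closed_level=0.2):
    """Duration of each eye-closing transition, in milliseconds.

    A transition runs from the last sample at or above `open_level` to the
    first following sample at or below `closed_level`. Both levels are
    assumed values chosen for illustration.
    """
    durations = []
    last_open_time = None
    for t, o in zip(times_ms, openness):
        if o >= open_level:
            last_open_time = t  # eye is still open; keep the latest time
        elif o <= closed_level and last_open_time is not None:
            durations.append(t - last_open_time)
            last_open_time = None  # wait for the eye to reopen
    return durations


def average_closure_time(times_ms, openness):
    """Mean closing duration, or None if no complete closure was seen."""
    durations = closure_durations(times_ms, openness)
    if not durations:
        return None
    return sum(durations) / len(durations)


if __name__ == "__main__":
    times = [0, 100, 200, 300, 400, 500, 600]
    signal = [1.0, 0.9, 0.5, 0.1, 0.0, 0.9, 0.1]
    print(closure_durations(times, signal))
    print(average_closure_time(times, signal))
```

Under this convention a larger value means slower eyelid movement, which matches the direction of the relationship described above.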

AECS measurements for evening (blue) and morning (red) bouts.

PerSac versus TOVA response time. The two parameters are clearly correlated, almost linearly. PerSac measures the percentage of saccade eye movements over time. A smaller PerSac measurement corresponds to a longer response time.

PerSac measurements for evening (blue) and morning (red) bouts.

The estimated composite fatigue index (blue) versus the normalized TOVA response time. The two curves track each other well.