
Real-Time Facial Feature Tracking Under Significant Facial Expressions and Various Face Orientations


Facial features, such as the eyes, eyebrows, nose and mouth, and their spatial arrangement are important for facial interpretation tasks based on face images, such as face recognition, facial expression analysis and face animation. Accurately locating these facial features in a face image is therefore a crucial step for these tasks to perform well. In practice, however, the appearance of facial features in images varies significantly among individuals. Even for a single person, the appearance of the facial features is easily affected by lighting conditions, face orientation and facial expression. Accurate facial feature detection and tracking therefore remains a very challenging task, especially under varying illumination, face orientations and facial expressions.

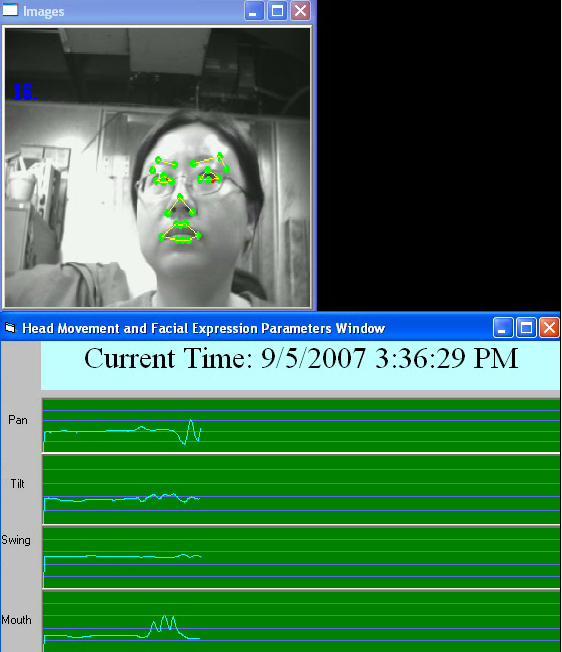

In our research, we proposed an effective approach to detect and track twenty-eight facial features in real time in face images with different facial expressions under various face orientations. The improvements in facial feature detection and tracking accuracy result from: (1) combining Kalman filtering with the eye positions to constrain the facial feature locations; (2) using pyramidal Gabor wavelets for efficient facial feature representation; (3) dynamic and accurate model updating for each facial feature to eliminate error accumulation; and (4) imposing global geometry constraints to eliminate geometrical violations. With these combined, the facial feature tracking accuracy reaches a practically acceptable level. The spatio-temporal relationships among the extracted facial features can subsequently be used to classify facial expressions successfully.
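As a rough illustration of item (1), the sketch below runs a constant-velocity Kalman filter on a single feature point. The page does not say how the eye positions enter the filter, so this sketch assumes, hypothetically, that the filter tracks the feature's offset from the midpoint of the two detected eyes. The class names `AxisKalman` and `FeatureTracker`, the noise values `q` and `r`, and the offset design are all illustrative assumptions, not the published method. Because x and y are treated as independent, each axis gets its own two-state (position, velocity) filter.

```python
from dataclasses import dataclass, field


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis; state = [position, velocity]."""
    q: float = 1.0  # process-noise variance added per step (hypothetical tuning value)
    r: float = 4.0  # measurement-noise variance in px^2 (hypothetical tuning value)
    x: list = field(default_factory=lambda: [0.0, 0.0])
    P: list = field(default_factory=lambda: [[100.0, 0.0], [0.0, 100.0]])

    def predict(self, dt: float = 1.0) -> float:
        p, v = self.x
        self.x = [p + dt * v, v]  # F = [[1, dt], [0, 1]]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with Q = q * I (a simplifying assumption)
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z: float) -> float:
        (a, b), (c, d) = self.P
        s = a + self.r            # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s     # Kalman gain
        innov = z - self.x[0]
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
        return self.x[0]


class FeatureTracker:
    """Tracks one feature as an offset from the eye midpoint (hypothetical design)."""

    def __init__(self):
        self.kx, self.ky = AxisKalman(), AxisKalman()

    @staticmethod
    def _midpoint(left_eye, right_eye):
        return ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)

    def predict(self, left_eye, right_eye):
        """Predicted image position, e.g. to center a local feature search."""
        mx, my = self._midpoint(left_eye, right_eye)
        return (mx + self.kx.predict(), my + self.ky.predict())

    def correct(self, measured, left_eye, right_eye):
        """Fuse the position found by the local search into the filter."""
        mx, my = self._midpoint(left_eye, right_eye)
        return (mx + self.kx.update(measured[0] - mx),
                my + self.ky.update(measured[1] - my))


# Usage: the eyes drift 2 px/frame; the feature stays 10 px right of and 25 px below
# the eye midpoint, so its offset is constant even though its image position moves.
tracker = FeatureTracker()
for t in range(30):
    left, right = (100 + 2 * t, 80), (160 + 2 * t, 80)
    predicted = tracker.predict(left, right)
    tracker.correct((140 + 2 * t, 105), left, right)
```

Expressing the state relative to the eyes means head translation already captured by the eye tracker does not have to be absorbed by the filter's velocity term; whether this matches the authors' formulation is not stated on this page.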


Facial Feature and Pose Tracking based on 3D Deformable Models


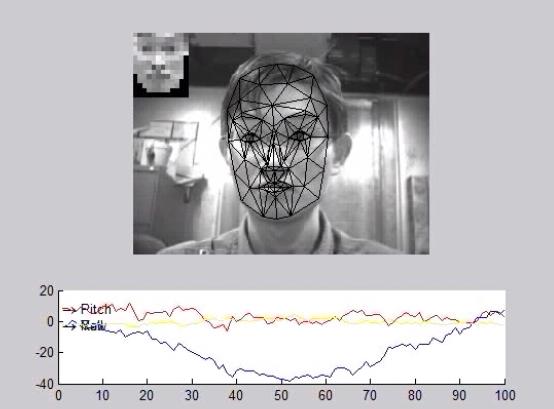

In our recent work, we compared our method with a 3D tracking method based on the Candide-3 face model and a particle filter. The 3D deformable face model can be initialized either manually or automatically at the first frame, using the 28 facial feature points detected by our 2D tracker. Demo 5 shows the 3D tracking result. Demo 6 compares the 2D and 3D trackers and indicates that the 3D tracker can also reliably track the 28 salient facial feature points while providing additional points, such as those on the cheeks. Although we currently consider only rigid motion, the Candide-3 face model also allows non-rigid motion, and its Action Unit (AU) parameters can be applied directly to expression analysis. Demo 6 also shows that the facial feature tracking and pose estimation results of the 3D tracker are not as smooth as those of our 2D tracker. This can be explained by the fact that the 3D tracker is purely based on global features (the whole face is warped with the estimated Candide-3 parameters into a geometry-free facial patch, which is then compared with a template to compute the likelihood), whereas our 2D tracker also uses local search.
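The 3D tracker above is described as a particle filter whose likelihood comes from comparing a warped, geometry-free face patch with a template. A generic sampling-importance-resampling (SIR) step is sketched below. Warping through Candide-3 is not reproduced, so the likelihood is a caller-supplied function; the random-walk motion model, `step_std`, the particle count, and the toy pose likelihood `toy_log_likelihood` in the usage are all assumptions for illustration, not the tracker used in the comparison.

```python
import math
import random


def sir_step(particles, log_likelihood, rng, step_std=0.05):
    """One sampling-importance-resampling step.

    particles: list of state tuples (e.g. yaw, pitch, roll).
    log_likelihood: callable(state) -> float; stands in for the template comparison.
    Returns (resampled particles, weighted-mean state estimate).
    """
    # 1. Predict: random-walk dynamics on every component (assumed motion model).
    moved = [tuple(s + rng.gauss(0.0, step_std) for s in p) for p in particles]
    # 2. Weight each hypothesis; subtract the max log-weight to avoid underflow.
    logw = [log_likelihood(p) for p in moved]
    top = max(logw)
    w = [math.exp(lw - top) for lw in logw]
    total = sum(w)
    w = [wi / total for wi in w]
    # 3. Estimate: weighted mean of the moved particles.
    dim = len(moved[0])
    estimate = tuple(sum(wi * p[k] for wi, p in zip(w, moved)) for k in range(dim))
    # 4. Multinomial resampling with replacement, proportional to the weights.
    return rng.choices(moved, weights=w, k=len(moved)), estimate


def toy_log_likelihood(true_pose, sigma=0.1):
    """Hypothetical stand-in: squared pose error replaces the patch-vs-template error."""
    def loglik(state):
        err = sum((a - b) ** 2 for a, b in zip(state, true_pose))
        return -err / (2.0 * sigma ** 2)
    return loglik


# Usage: recover a fixed, made-up pose from 500 particles spread over [-1, 1]^3.
rng = random.Random(0)
particles = [tuple(rng.uniform(-1.0, 1.0) for _ in range(3)) for _ in range(500)]
loglik = toy_log_likelihood((0.3, -0.2, 0.1))
for _ in range(20):
    particles, estimate = sir_step(particles, loglik, rng)
```

Because each frame is scored only by one global likelihood, the estimate can jitter from frame to frame, which is consistent with the smoothness difference the paragraph attributes to purely global matching.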

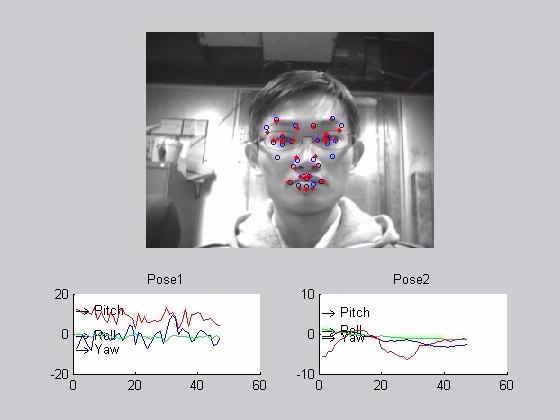

We also tried directly instantiating the 3D face model with the 28 facial feature points provided by the 2D tracker at each frame. The benefits of doing this include additional facial feature points and the AU parameters. The result is shown in Demo 7. Exploiting the cooperation between the 2D and 3D face models is one direction of our future work.
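Instantiating a 3D model from tracked 2D points involves, at minimum, estimating a projection that maps model vertices onto those points. A minimal sketch, assuming a general affine camera (a superset of weak perspective) and known 3D model coordinates for each tracked point, solves for the 2x4 projection matrix by linear least squares. It fits only the rigid/camera part and does not estimate Candide-3 shape or AU parameters; the function names, model coordinates and `true_P` in the usage are made up for illustration.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("singular system: model points may be coplanar")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_affine_camera(points3d, points2d):
    """Least-squares 2x4 affine projection P with [u, v] ~ P @ [X, Y, Z, 1]."""
    rows = [list(p) + [1.0] for p in points3d]  # homogeneous 3D points
    # The normal matrix A^T A is shared by the u-row and v-row of P.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    P = []
    for k in range(2):  # k = 0 -> u row, k = 1 -> v row
        atb = [sum(r[i] * q[k] for r, q in zip(rows, points2d)) for i in range(4)]
        P.append(solve_linear(ata, atb))
    return P


def project(P, p3):
    """Apply the 2x4 affine projection to one 3D point."""
    h = list(p3) + [1.0]
    return tuple(sum(P[k][i] * h[i] for i in range(4)) for k in range(2))


# Usage with made-up model coordinates (not Candide-3 vertices) and a made-up camera.
model = [(-30, 35, 0), (30, 35, 0), (0, 0, 25), (-20, -35, 5), (20, -35, 5), (0, 60, 2)]
true_P = [[1.2, 0.1, -0.3, 160.0], [-0.05, -1.1, 0.2, 120.0]]
observed = [project(true_P, p) for p in model]
P = fit_affine_camera(model, observed)
residual = max(abs(a - b) for p, o in zip(model, observed)
               for a, b in zip(project(P, p), o))
```

At least four non-coplanar model points are needed for the normal matrix to be invertible; once the projection is known, the remaining model vertices (for example cheek points) can be projected into the image with `project`.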


Publications:


(1) Yan Tong and Qiang Ji, “Automatic Eye Position Detection and Tracking under Natural Facial Movement”, in Passive Eye Monitoring (Editor: Riad I. Hammoud), pp. 67-84, Springer, 2007.

(2) Yan Tong, Yang Wang, Zhiwei Zhu, and Qiang Ji, “Robust Facial Feature Tracking under Varying Face Pose and Facial Expression”, Pattern Recognition, Vol. 40, No. 11, pp. 3195-3208, November 2007.

(3) Zhiwei Zhu and Qiang Ji, “Robust Pose Invariant Facial Feature Detection and Tracking in Real-Time”, the 18th International Conference on Pattern Recognition (ICPR), Hong Kong, August 2006.

(4) Yan Tong, Yang Wang, Zhiwei Zhu, and Qiang Ji, “Facial Feature Tracking using a Multi-State Hierarchical Shape Model under Varying Face Pose and Facial Expression”, the 18th International Conference on Pattern Recognition (ICPR), Hong Kong, August 2006.

(5) Yan Tong and Qiang Ji, “Multiview Facial Feature Tracking with a Multi-modal Probabilistic Model”, the 18th International Conference on Pattern Recognition (ICPR), Hong Kong, August 2006.


Demos:


Demo 1: Real-time facial feature tracking (short version)

Demo 2: Real-time facial feature tracking (long version)

Demo 3: Real-time facial feature tracking with estimated 3D head pose


Demo 4: Real-time facial feature tracking under large pose and scale changes


Latest Demos:


Demo 5: 3D tracking based on the Candide-3 face model, initialized by the 28 detected facial feature points (bottom: estimated pose; top left: geometry-free face template)

Demo 6: Comparison of 2D and 3D facial feature/pose tracking (red/blue circles represent the results of the 2D/3D tracker; bottom left/right: pose estimated by the 3D/2D tracker)

Demo 7: 3D face model fitting with facial feature points provided by the 2D tracker (top right: warped face; the bar graph at bottom right indicates the AU intensities)
