Automatic Human Behavior Tracking and Analysis

chenj4@rpi.edu

- Developed a gaze tracking system using a stereo camera and two IR lights that achieves a gaze accuracy of less than one degree with a one-time calibration (in collaboration with Prof. Wayne Gray in Cognitive Science).

- Development of a gaze tracker based on eye corners. This system uses a single camera and requires no IR lights.

New Update: A new probabilistic gaze estimation algorithm that estimates eye parameters and eye gaze without personal calibration (accepted at CVPR 2011).

Face Tracking and Facial Feature Tracking System

- Development of a robust tracking algorithm that tracks the face region in video.

- Development of a facial feature tracking algorithm that handles large changes in scale and pose.

- Development of various facial feature tracking software, including a facial feature tracker for animation (with Mobinex, Inc.) and a facial feature tracker for thermal video (with Li Creative Technologies (LcT), Inc.).


Upper-body tracking

New Update: We propose a new method to learn a prior model of body pose from various generic constraints.

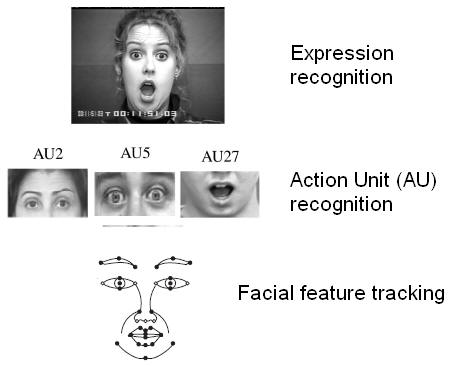

Facial activity recognition and facial expression analysis

- Development of a dynamic Bayesian network that systematically models rigid and non-rigid facial motions, their interactions on 2D facial shapes, the uncertainties in their image observations, and the dynamics of facial activity.

- Development of a hierarchical framework for simultaneous facial feature tracking and facial activity recognition.

Other Projects

Facial tracking with a 3D mesh (CANDIDE-3)

Automatic Face Animation (Face Transfer)

The system transfers a facial expression from one person to another, e.g., from "surprised Jixu" to "surprised Bond" :).

Reference: D. Vlasic, M. Brand, H. Pfister, and J. Popović, "Face Transfer with Multilinear Models," ACM Transactions on Graphics, 2005.

New: Matlab code for CANDIDE-3 face transfer.
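The multilinear idea behind the cited face transfer paper can be illustrated with a short sketch. This is a minimal NumPy illustration under stated assumptions, not the Matlab code mentioned above: it simplifies the model to just an identity mode and an expression mode, and it assumes a precomputed core tensor of shape (V, I, E) (flattened vertex coordinates, identity, expression). In the paper the model is learned from a face database; here a random tensor stands in. The function names `mode_product`, `synthesize`, and `transfer_expression` are hypothetical.

```python
import numpy as np


def mode_product(tensor, vec, mode):
    """n-mode product of a tensor with a vector: contracts axis `mode` with `vec`."""
    return np.tensordot(tensor, vec, axes=([mode], [0]))


def synthesize(core, w_id, w_exp):
    """Face f = core x_2 w_id x_3 w_exp for a core tensor of shape (V, I, E).

    V: flattened 3D vertex coordinates, I: identity mode, E: expression mode.
    """
    # Contract the expression axis (2) first so the identity axis stays at index 1.
    return mode_product(mode_product(core, w_exp, 2), w_id, 1)


def transfer_expression(core, w_id_target, w_exp_source):
    """Render the target's identity with the source actor's expression weights."""
    return synthesize(core, w_id_target, w_exp_source)


# Illustration only: a random core tensor stands in for one learned from face scans.
rng = np.random.default_rng(0)
core = rng.standard_normal((3 * 10, 4, 3))  # 10 vertices, 4 identity dims, 3 expression dims
w_id_bond = rng.standard_normal(4)
w_exp_surprised = rng.standard_normal(3)  # assumed: estimated by tracking the source actor

surprised_bond = transfer_expression(core, w_id_bond, w_exp_surprised)
print(surprised_bond.reshape(10, 3).shape)  # (10, 3): one xyz row per vertex
```

Because the model is linear in each weight vector separately, swapping in another person's expression weights changes the expression while the target identity weights stay fixed.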

Earlier work (before 2006)

- 06/2005–08/2005: Intern in the WSM (Web Search & Mining) Group, Microsoft Research Asia. (Most of my work was done with the Speech Group under the direction of Dr. Frank Soong.)

Applied automatic speech segmentation to voice activity detection (VAD) and isolated-word recognition. Built an isolated-word recognition demo system on a smartphone based on DTW (dynamic time warping) and an automatic segmentation algorithm.

- 09/2003–07/2005: Research assistant, University of Science & Technology of China.

Developed a speaker verification system. This system participated in the NIST04 and NIST05 Speaker Recognition Evaluations (SRE) and ranked 5th among 23 systems, competing with systems from MIT, SRI, and others.
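Dynamic time warping (DTW), used in the smartphone isolated-word recognition demo described above, aligns two feature sequences of different lengths before comparing them. The following is a minimal template-matching sketch, not the original implementation. It assumes each utterance has already been segmented and converted to a sequence of feature frames (e.g., MFCC vectors; the feature choice is an assumption). The names `dtw_distance` and `recognize` are hypothetical.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic DTW distance between feature sequences a (n x d) and b (m x d).

    Uses the Euclidean distance between frames as the local cost and the
    standard three-way step pattern.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Treat 1-D inputs as sequences of scalar frames.
    if a.ndim == 1:
        a = a[:, None]
    if b.ndim == 1:
        b = b[:, None]
    n, m = len(a), len(b)
    # Pairwise frame-to-frame local costs, shape (n, m).
    cost = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    # acc[i, j] = minimal accumulated cost of aligning a[:i] with b[:j].
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(
                acc[i - 1, j],      # a advances, b's frame is reused
                acc[i, j - 1],      # b advances, a's frame is reused
                acc[i - 1, j - 1],  # both advance
            )
    return float(acc[n, m])


def recognize(utterance, templates):
    """Return the label whose stored template has the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(utterance, templates[label]))


# Toy 1-D "feature" sequences standing in for real acoustic frames.
templates = {"up": [1, 2, 3], "down": [3, 2, 1]}
print(recognize([1, 1, 2, 3, 3], templates))  # prints up
```

The warping lets a slowly spoken word (repeated frames) still match its template at zero cost, which is why DTW suits isolated-word matching with few stored templates.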

Book Chapters

Lei Zhang, Jixu Chen, Zhi Zeng, and Qiang Ji, A Generic Framework for 2D and 3D Upper Body Tracking, in "Machine Learning for Human Motion Analysis: Theory and Practice" (Eds. Liang Wang, Li Cheng, and Guoying Zhao), pp. 133–151, IGI Press, 2010.

Yongmian Zhang, Jixu Chen, Yan Tong, and Qiang Ji, Spontaneous Facial Expression Analysis and Synthesis for Interactive Facial Animation, accepted as a chapter in "Computer Vision for Multimedia Applications: Methods and Solutions," IGI Press.

Journal

Yan Tong, Jixu Chen, and Qiang Ji, A Unified Probabilistic Framework for Spontaneous Facial Action Modeling and Understanding, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 32, No. 2, pp. 258–274, February 2010.

Conference

Jixu Chen and Qiang Ji, Probabilistic Gaze Estimation Without Active Personal Calibration, Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2011.

Jixu Chen and Qiang Ji, A Hierarchical Framework for Simultaneous Facial Activity Tracking, IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2011.

William Maio, Jixu Chen, and Qiang Ji, Constraint-Based Gaze Estimation Without Active Calibration, IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2011.

Jixu Chen and Qiang Ji, Efficient 3D Upper Body Tracking with Self-Occlusions, International Conference on Pattern Recognition, 2010.

Jixu Chen, Minyoung Kim, Yu Wang, and Qiang Ji, Switching Gaussian Process Dynamic Models for Simultaneous Composite Motion Tracking and Recognition, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2009.

Jixu Chen, Wayne Gray, and Qiang Ji, A Robust 3D Eye Gaze Tracking System using Noise Reduction, Eye Tracking Research & Applications Symposium (ETRA), 2008.

Jixu Chen and Qiang Ji, 3D Gaze Estimation based on Eye Corners with a Single Camera, International Conference on Pattern Recognition, 2008.

Lei Zhang, Jixu Chen, Zhi Zeng, and Qiang Ji, 2D and 3D Upper Body Tracking with One Framework, International Conference on Pattern Recognition, 2008.

Jixu Chen and Qiang Ji, Online Spatial-temporal Data Fusion for Robust Adaptive Tracking, IEEE Workshop on Online Learning for Classification, in conjunction with IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2007.

Jixu Chen, Dai Beiqian, and Sun Jun, Prosodic Features Based on Wavelet Analysis for Speaker Verification, Proc. Interspeech 2005 (Eurospeech), Lisbon, Portugal, 2005.

Jixu Chen, Liu Minghui, Dai Beiqian, and Li Hui, A New Method of Score Normalization for Text-Independent Speaker Verification, Signal Processing, 2006 (in Chinese).